Slurm Scheduler Overview

How the Slurm scheduler works

Savio uses the Slurm scheduler to manage jobs and to prioritize them amongst the many Savio users.

Overview of Slurm

Job submission and scheduling on Savio use the Slurm software. Slurm controls user access to the resources on Savio and manages the queue of pending jobs by assigning a priority to each job and by trying to fit together jobs with different resource requirements as efficiently as possible. Two key features of Slurm are Fairshare and Backfill.

Fairshare

Savio uses Slurm's Fairshare system to prioritize amongst jobs in the queue. Fairshare assigns a numerical priority score to each job based on its characteristics. The key components of the score in Savio's Slurm configuration are the QoS (which ensures that condo jobs are prioritized for the resources purchased by a condo) and recent usage (prioritizing groups and users who have not used Savio much recently over those who have). Usage is quantified using a standard decay schedule with a half-life of 14 days, which downweights usage further in the past. Savio uses the Fair Tree algorithm, which prioritizes jobs based on the total recent usage within each faculty computing allowance (FCA).
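To make the decay schedule concrete, here is a minimal sketch of half-life weighting. It assumes usage is available as (age in days, amount) pairs; the function names, the record format, and the sample numbers are illustrative and are not part of Slurm, which tracks decayed usage internally in its own way.

```python
HALF_LIFE_DAYS = 14.0  # half-life stated above for Savio's configuration


def decay_weight(age_days: float, half_life_days: float = HALF_LIFE_DAYS) -> float:
    """Weight applied to usage that occurred `age_days` ago."""
    return 0.5 ** (age_days / half_life_days)


def decayed_usage(records, half_life_days: float = HALF_LIFE_DAYS) -> float:
    """Sum usage amounts after downweighting each one by its age.

    `records` is an iterable of (age_days, amount) pairs (hypothetical format).
    """
    return sum(amount * decay_weight(age, half_life_days) for age, amount in records)


# Made-up example: 100 units used today, 14 days ago, and 28 days ago.
example = [(0, 100.0), (14, 100.0), (28, 100.0)]
print(decayed_usage(example))  # 100 + 50 + 25 = 175.0
```

In this sketch, usage from two weeks ago counts half as much as usage from today, and usage from four weeks ago counts a quarter as much.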

Backfill

The job(s) at the top of the queue have highest priority. However, if Slurm determines it's possible (based on the resources requested and time limits of the jobs in the queue) for jobs lower in the queue to run without increasing the time it would take to start higher-priority jobs, Slurm will run those lower-priority jobs. This is called backfill. In particular, this can occur when Slurm is collecting resources for multi-node jobs. This feature helps smaller jobs to run and prevents large jobs from hogging the system.

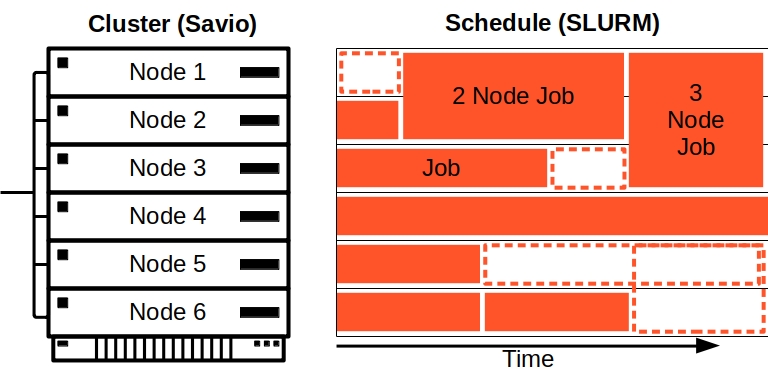

Here's an example of how jobs might be scheduled in a cluster with six nodes. Even though the first node is idle at the moment (see the orange-dashed white box at top of the figure), if you submit a one-node job, it will not be able to run if it has a time limit set to be longer than the available time before Slurm anticipates starting a 2-node job on nodes 1 and 2. A one-node job with a sufficiently short time limit would be able to run on node 1.

You can increase your odds of being able to take advantage of backfill by requesting less time and fewer nodes.
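The reasoning in the six-node example can be sketched as a simple fit check. This is a deliberately simplified illustration, assuming a single idle window of known length; the function name, parameters, and numbers are hypothetical, and Slurm's actual backfill scheduler considers all pending jobs and resources rather than one window.

```python
def can_backfill(job_nodes: int, job_time_limit_min: int,
                 idle_nodes: int, minutes_until_reserved: int) -> bool:
    """Return True if the job fits in the idle window without delaying
    the higher-priority job that is expected to need those nodes."""
    fits_on_nodes = job_nodes <= idle_nodes
    finishes_in_time = job_time_limit_min <= minutes_until_reserved
    return fits_on_nodes and finishes_in_time


# Made-up numbers: one node is idle for 120 minutes before a 2-node job
# is expected to start on it.
print(can_backfill(1, 90, idle_nodes=1, minutes_until_reserved=120))   # True
print(can_backfill(1, 240, idle_nodes=1, minutes_until_reserved=120))  # False
```

Note that the check uses the requested time limit, not the time the job actually needs, which is why requesting a realistic (shorter) time limit improves the odds of being backfilled.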

How priorities and queueing on Savio work

Savio has two main ways to run jobs -- under a faculty computing allowance (FCA) and under a condo.

Condos

Condo usage, aggregated over all users of the condo, is limited at any given time to at most the number of nodes purchased by the condo. Additional jobs will be queued until usage drops below that limit. The pending jobs will be ordered based on the Slurm Fairshare priority, so that users with less recent usage are prioritized.

Note that in some circumstances, even when the condo's usage is below the limit, a condo job might not start immediately because the partition is fully used, across all condo and FCA users of the given partition. This can occur when a condo has not been fully used and FCA jobs have filled up the partition during that period of limited usage. Condo jobs are prioritized over FCA jobs in the queue and will start as soon as resources become available. Usually any lag in starting condo jobs under this circumstance is limited.
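The two conditions described above (the condo's node limit and free space in the partition) can be summarized in a small sketch. The function, its parameters, and the status strings are hypothetical and only mirror the logic in the text; they do not correspond to any Slurm setting or output.

```python
def condo_job_status(nodes_requested: int, condo_nodes_in_use: int,
                     condo_nodes_purchased: int, partition_nodes_free: int) -> str:
    """Classify a condo job according to the two conditions in the text."""
    # Condition 1: aggregate condo usage may not exceed the purchased nodes.
    if condo_nodes_in_use + nodes_requested > condo_nodes_purchased:
        return "queued: condo limit reached"
    # Condition 2: the partition itself must have enough free nodes.
    if nodes_requested > partition_nodes_free:
        return "queued: partition full"
    return "can start"


# Made-up numbers for a condo that purchased 4 nodes.
print(condo_job_status(2, 3, 4, 10))  # queued: condo limit reached
print(condo_job_status(2, 1, 4, 1))   # queued: partition full
print(condo_job_status(2, 1, 4, 5))   # can start
```

The second case is the one described in the note: the condo is under its limit, but the job waits until nodes in the partition are freed.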

FCA

FCA jobs will start when they reach the top of the queue and resources become available as running jobs finish. The queue is ordered based on the Slurm Fairshare priority (specifically, the Fair Tree algorithm). The primary influence on this priority is the overall recent usage by all users in the same FCA as the user submitting the job. The priority of jobs from different users within an FCA is then further influenced by each user's individual recent usage.

In more detail, usage at the FCA level (summed across all partitions) is ordered across all FCAs, and the priority for a given job depends inversely on that recent usage (based on the FCA the job is using). Similarly, amongst users within an FCA, usage is ordered amongst those users, such that for a given partition, a user with lower recent usage in that partition will have higher priority than one with higher recent usage.
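The two-level ordering can be sketched as follows. This is a simplified illustration, not Slurm's Fair Tree implementation: it assumes all FCAs and users have equal shares, ignores partitions, and ranks purely by recent usage. The account names, user names, and usage numbers are invented.

```python
def rank_users(fca_usage: dict, user_usage: dict) -> list:
    """Return (fca, user) pairs from highest to lowest priority.

    FCAs are ordered by total recent usage (lowest first); within each
    FCA, users are ordered by their own recent usage (lowest first).
    """
    ranked = []
    for fca in sorted(fca_usage, key=fca_usage.get):
        users = user_usage.get(fca, {})
        for user in sorted(users, key=users.get):
            ranked.append((fca, user))
    return ranked


# Invented example data.
fca_usage = {"fc_alpha": 500.0, "fc_beta": 120.0}
user_usage = {
    "fc_alpha": {"ana": 400.0, "bo": 100.0},
    "fc_beta": {"cy": 120.0},
}
print(rank_users(fca_usage, user_usage))
# [('fc_beta', 'cy'), ('fc_alpha', 'bo'), ('fc_alpha', 'ana')]
```

In this sketch, user `cy` ranks above `bo` even though `bo` has lower individual usage, because usage at the FCA level is compared first.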

One can see the Fairshare priority by looking at the FairShare column in the output of this command:

sshare -a

Other columns show the recent usage, which is the main factor influencing the FairShare column. Be sure to look at the usage at the level of the FCA when comparing priority across users in different FCAs.
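If you want to compare users programmatically, one option is to request pipe-delimited output (for example with `sshare -a -P`) and parse it. The sketch below parses a hard-coded sample string rather than calling `sshare`. The rows are invented, and the column names are an assumption based on the usual default `sshare` header, so check them against the output on your system.

```python
# Invented sample in the pipe-delimited layout assumed above.
SAMPLE = """Account|User|RawShares|NormShares|RawUsage|EffectvUsage|FairShare
fc_alpha||1|0.500000|500|0.806452|
fc_alpha|ana|1|0.500000|400|0.800000|0.250000
fc_alpha|bo|1|0.500000|100|0.200000|0.500000
fc_beta||1|0.500000|120|0.193548|
fc_beta|cy|1|1.000000|120|1.000000|0.750000
"""


def parse_sshare(text: str) -> list:
    """Turn pipe-delimited text with a header row into a list of dicts."""
    lines = [line for line in text.strip().splitlines() if line]
    header = lines[0].split("|")
    return [dict(zip(header, line.split("|"))) for line in lines[1:]]


rows = parse_sshare(SAMPLE)
# Keep only per-user rows that carry a FairShare value, highest value first.
user_rows = [r for r in rows if r["User"] and r["FairShare"]]
user_rows.sort(key=lambda r: float(r["FairShare"]), reverse=True)
for r in user_rows:
    print(r["Account"], r["User"], r["RawUsage"], r["FairShare"])
```

Rows with an empty User field are account-level rows; those are the ones to look at when comparing usage across FCAs.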