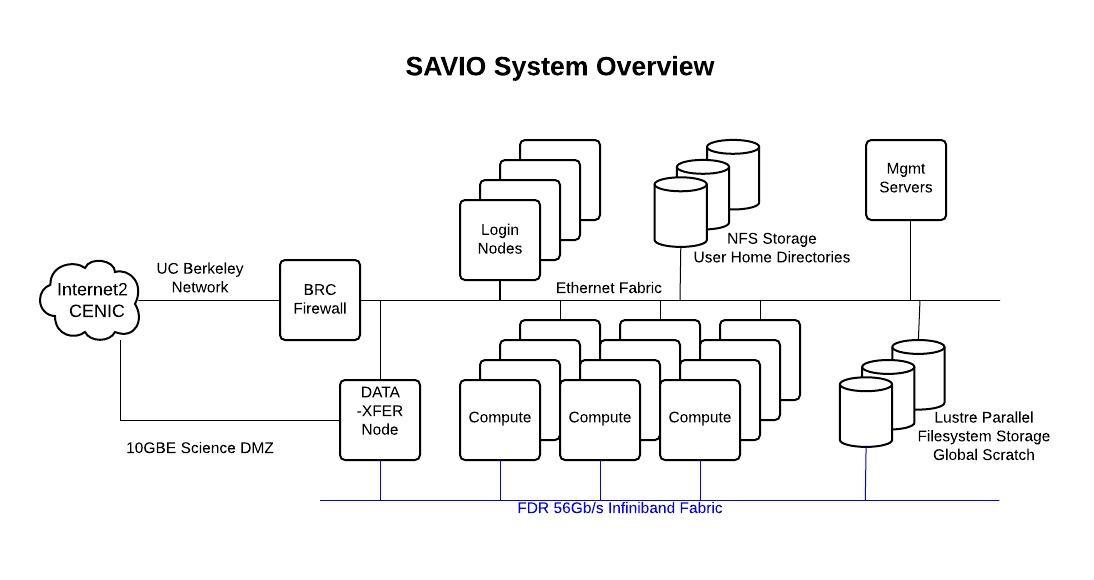

System Overview

Summary

The Savio institutional cluster forms the foundation of the Berkeley Research Computing (BRC) Institutional/Condo Cluster. As of April 2020, the system consists of 600 compute nodes in various configurations to support a wide diversity of research applications, with over 15,300 cores in total and a peak performance of 540 teraFLOPS (CPU) and 1 petaFLOPS (GPU). It offers approximately 3.0 petabytes of high-speed parallel storage. Savio was launched in May 2014 and is named after Mario Savio, a political activist and a key member of the Berkeley Free Speech Movement.

Please see the facilities statement, suitable for inclusion in grant proposals, for a summary of the Savio condo program and, more generally, the services provided by Berkeley Research Computing.

The video below describes the difference between login, compute, and data transfer nodes:

Compute

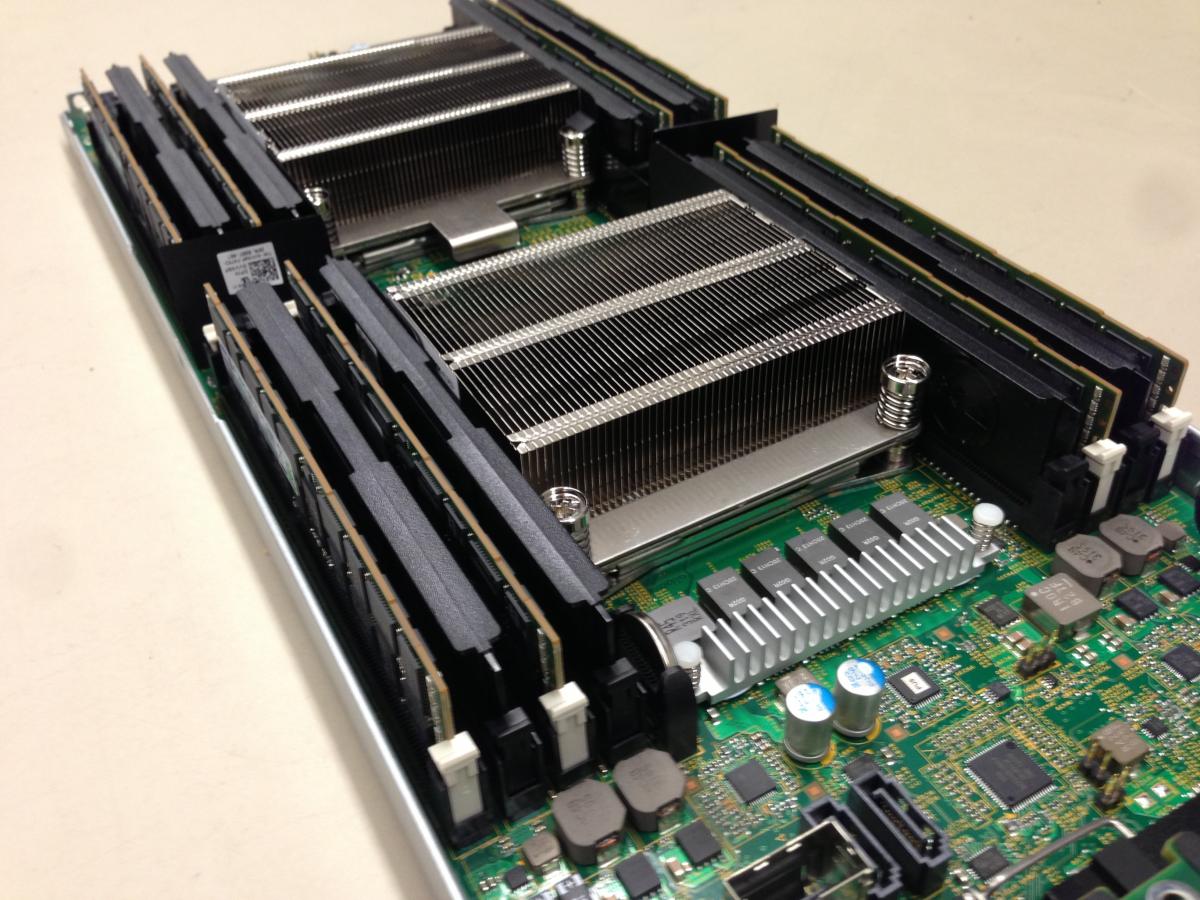

savio1: (Retired in November 2023) Savio1 was the first generation of compute nodes, purchased in 2014. Each node was a Dell PowerEdge C6220 server blade equipped with two Intel Xeon 10-core Ivy Bridge processors (20 cores per node) on a single board configured as an SMP unit. The core frequency was 2.5 GHz and each core supported 8 floating-point operations per clock period, for a peak performance of 20 GFLOPS/core or 400 GFLOPS/node. Turbo mode enabled processor cores to operate at 3.3 GHz when sufficient power and cooling were available.

- savio: 160 nodes with 64 GB of 1866 MHz DDR3 memory

- savio_bigmem: 4 nodes configured as "BigMem" nodes with 512 GB of 1600 MHz DDR3 memory
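The peak-performance figures for savio1 follow from simple arithmetic: clock rate times floating-point operations per clock period, times the number of cores. The sketch below reproduces that calculation at the base clock from the numbers given above (2.5 GHz, 8 operations per clock period, 20 cores per node). The function name `peak_gflops` is illustrative only and is not part of any Savio tooling.

```python
def peak_gflops(clock_ghz: float, flops_per_cycle: int, cores: int = 1) -> float:
    """Theoretical peak in GFLOPS: clock rate (GHz) x FLOPs per cycle x cores."""
    return clock_ghz * flops_per_cycle * cores


# savio1 figures taken from the description above.
savio1_core = peak_gflops(2.5, 8)            # one core at the base clock
savio1_node = peak_gflops(2.5, 8, cores=20)  # all 20 cores of a node

print(f"savio1: {savio1_core:.1f} GFLOPS/core, {savio1_node:.1f} GFLOPS/node")
```

These are theoretical upper bounds; real applications typically sustain only a fraction of peak.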

savio2: Savio2 is the second generation of compute for the Savio cluster, purchased in 2015. The general compute pool consists of Lenovo NeXtScale nx360m5 nodes equipped with two Intel Xeon 12-core Haswell processors (24 cores per node) on a single board configured as an SMP unit. The core frequency is 2.3 GHz and each core supports 16 floating-point operations per clock period. A small number of nodes have slightly different processor speeds, and some have 28 cores per node.

- savio2: 163 nodes with 64 GB of 1866 MHz DDR3 memory

- savio2_bigmem: 20 nodes configured as "BigMem" nodes with 128 GB of 2133 MHz DDR4 memory

savio2_htc: Purchased as part of the second generation of compute for Savio, the HTC pool is available for users who need to run High-Throughput Computing or serial compute jobs. The pool consists of 20 Lenovo NeXtScale nx360m5 nodes equipped with Intel Haswell processors that have a faster 3.4 GHz clock speed but a lower core count (12 instead of 24), and 128 GB of 2133 MHz DDR4 memory, to better facilitate serial workloads.

savio2_gpu: (Retired in summer 2024; GPUs no longer accessible) Also purchased as part of the second generation of compute for Savio, the Savio2 GPU nodes were intended for researchers using graphics processing units (GPUs) for computation or image processing. The pool consisted of 17 Finetec Computer Supermicro 1U nodes, each equipped with two Intel Xeon 4-core 3.0 GHz Haswell processors (8 cores total) and two NVIDIA K80 dual-GPU accelerator boards (4 GPUs total per node). Each GPU offers 2,496 NVIDIA CUDA cores.

savio2_1080ti: This partition has 7 nodes, each with four NVIDIA GTX 1080 Ti GPU cards.

savio2_bigmem: This partition has nodes with 128 GB of RAM.

savio2_knl: This partition of Savio2 has 32 nodes with Intel Knights Landing (KNL) processors. Each node has 64 cores in a single socket. The KNL nodes are special in that each has 16 GB of MCDRAM within the processor, with a bandwidth of 400 GB/s. The MCDRAM is typically configured in cache mode; contact support if you need a different mode.

Savio3: Savio3 is the third generation of compute, whose deployment began at the end of 2018. Its current composition is:

- savio3: 116 nodes with Skylake processors (2x16 @ 2.1 GHz), 96 GB RAM

- savio3_bigmem: 16 nodes with Skylake processors (2x16 @ 2.1 GHz), 384 GB RAM

- savio3_xlmem: 2 nodes with Skylake processors (2x16 @ 2.1 GHz), 1.5 TB RAM

- savio3_gpu: 2 nodes with two NVIDIA Tesla V100 GPUs each, 5 nodes with four NVIDIA GTX 2080ti GPUs each, and 3 nodes with eight NVIDIA GTX 2080ti GPUs each

Savio4: Savio4 is the fourth (and current) generation of compute that began at the end of 2022. All Savio4 nodes are scheduled on a per-core basis rather than Savio's traditional per-node scheduling. Its current composition is:

- savio4_htc: 108 nodes with Intel Xeon Gold 6330 processors (2x28 @ 2.0 GHz), 256 or 512 GB RAM

- savio4_gpu: 24 nodes with eight NVIDIA A5000 GPUs each

More details about these nodes are available in the Savio hardware configuration.
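The practical difference between per-node and per-core scheduling is how many cores a job occupies. The sketch below assumes that per-node scheduling hands a job whole nodes (so a request is rounded up to a multiple of the node's core count), while per-core scheduling allocates only the cores requested. The function `cores_allocated` is a hypothetical helper for illustration, not part of SLURM or Savio.

```python
import math


def cores_allocated(requested_cores: int, cores_per_node: int, per_core: bool) -> int:
    """Cores a job occupies under per-core or (assumed whole-node) per-node scheduling."""
    if requested_cores < 1 or cores_per_node < 1:
        raise ValueError("core counts must be positive")
    if per_core:
        # Per-core scheduling: the job occupies exactly what it asked for.
        return requested_cores
    # Per-node scheduling (assumption): round up to whole nodes.
    nodes_needed = math.ceil(requested_cores / cores_per_node)
    return nodes_needed * cores_per_node


# A 4-core job on a savio3 node (2x16 = 32 cores, per-node scheduling)
# versus a savio4_htc node (2x28 = 56 cores, per-core scheduling).
print(cores_allocated(4, 32, per_core=False))
print(cores_allocated(4, 56, per_core=True))
```

Under these assumptions, small or serial jobs leave fewer cores idle on Savio4 than on the per-node partitions.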

Login Nodes

When users log in to Savio, they ssh to hpc.brc.berkeley.edu. This makes a connection through the BRC firewall, and they land on one of the four interactive Login Nodes. Users should use the Login Nodes to prepare their jobs (editing files, file operations, compiling applications, very brief code testing, etc.) and to submit their jobs via the scheduler. These four Login Nodes have the same specifications as some of the general compute nodes, but they are shared and are not to be used for directly running computations; instead, submit your jobs via the SLURM scheduler, to be run on one or more of the cluster's hundreds of Compute Nodes. Nor should they be used for transferring data; instead, use the cluster's Data Transfer Node.

Warning

The login nodes are not intended for running computations. Computation on a login node is limited by the system configuration to at most two CPUs and 16 GB memory (RAM) per user. Furthermore, you should limit the time any test code is running on a login node. While there is no hard limit on time, a few minutes could be considered a reasonable length of time. Jobs running on the login nodes could significantly affect all users of the system and may be subject to termination by system administrators.

Savio users can use the 'top' (table of processes) command on the login nodes to display a real-time view of running processes and kernel-managed tasks. The command also provides a system information summary that shows resource utilization, including CPU and memory usage. This command can provide you with useful information on the CPU usage, memory usage, and length of time of the individual processes (i.e., programs) you or others are running on the login nodes.
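For a scripted view of the same information, the sketch below lists your own processes sorted by CPU usage. It assumes the standard Linux `ps` command is available and is only an illustration, not a tool provided on Savio. The names `ProcInfo`, `parse_ps_output`, and `my_processes` are hypothetical.

```python
import getpass
import subprocess
from typing import List, NamedTuple


class ProcInfo(NamedTuple):
    pid: int
    cpu_percent: float
    mem_percent: float
    elapsed: str   # elapsed time as printed by ps, e.g. "01:02" or "10:00:00"
    command: str


def parse_ps_output(text: str) -> List[ProcInfo]:
    """Parse header-less 'pid pcpu pmem etime comm' lines, highest CPU first."""
    procs = []
    for line in text.splitlines():
        fields = line.strip().split(None, 4)
        if len(fields) < 5:
            continue  # skip blank or malformed lines
        pid, cpu, mem, elapsed, command = fields
        procs.append(ProcInfo(int(pid), float(cpu), float(mem), elapsed, command))
    return sorted(procs, key=lambda p: p.cpu_percent, reverse=True)


def my_processes() -> List[ProcInfo]:
    """Run ps for the current user; an empty header ('name=') suppresses the header row."""
    result = subprocess.run(
        ["ps", "-u", getpass.getuser(),
         "-o", "pid=", "-o", "pcpu=", "-o", "pmem=", "-o", "etime=", "-o", "comm="],
        capture_output=True, text=True, check=True,
    )
    return parse_ps_output(result.stdout)
```

Calling `my_processes()` on a login node returns your processes with the busiest first, which makes it easy to spot a test run that has been left running too long.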

Storage

The cluster uses a two-tier storage strategy. Each user is allotted 50 GB of backed-up home directory storage, which is hosted by IST's storage group on Network Appliance NFS servers. These servers provide highly available and reliable storage for the persistent data users need to run their compute jobs. The second storage system available to users is a high-performance 9 PB Lustre parallel filesystem, provided by a Data Direct Networks Storage Fusion Architecture 12KE storage platform, that is available as Global Scratch. Users whose computations have moderate to heavy I/O demands should use Global Scratch to write and read temporary files during the course of a job. There are no limits on the use of Global Scratch; however, this filesystem is NOT backed up, and it will be periodically purged of older files in order to ensure that there is sufficient working space for users running current calculations.
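As an illustration of keeping temporary job files out of the home directory, the sketch below writes intermediate data under a scratch location. The environment variable `SAVIO_SCRATCH_DIR` is a hypothetical name introduced for this example (the actual Global Scratch path is not given here); when it is unset, the code falls back to the system temporary directory so that it runs anywhere.

```python
import os
import tempfile
from pathlib import Path

# Hypothetical: SAVIO_SCRATCH_DIR is assumed to point at your Global Scratch
# directory. It is not a variable defined by Savio.
scratch_root = Path(os.environ.get("SAVIO_SCRATCH_DIR", tempfile.gettempdir()))


def write_intermediate(data: bytes, root: Path = scratch_root) -> Path:
    """Write data to a uniquely named temporary file under root and return its path."""
    with tempfile.NamedTemporaryFile(
        dir=root, prefix="job_", suffix=".tmp", delete=False
    ) as fh:
        fh.write(data)
        return Path(fh.name)


path = write_intermediate(b"intermediate results\n")
print(path.read_bytes())
path.unlink()  # clean up temporary files once the job no longer needs them
```

Because Global Scratch is not backed up and is periodically purged, anything worth keeping should be copied elsewhere when the job finishes.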

Interconnect

The interconnect for the cluster is a 56 Gb/s Mellanox FDR InfiniBand fabric configured as a two-level fat tree. Edge switches accommodate up to 24 compute nodes and have 8 uplinks into the core InfiniBand switch fabric, giving a 3:1 blocking factor that provides excellent, cost-effective network performance for MPI and parallel filesystem traffic. A second fabric, gigabit Ethernet, is used for access to interactive login and compute nodes and for Home Directory storage access.
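The 3:1 blocking factor is the ratio of node-facing ports to uplinks on an edge switch. The sketch below works through that ratio and, as a back-of-envelope estimate not stated in the text above, the per-node share of uplink bandwidth in the worst case where all 24 nodes on a switch send traffic off-switch at once. The names used are illustrative.

```python
from fractions import Fraction

LINK_GBPS = 56        # FDR InfiniBand link rate
NODES_PER_EDGE = 24   # compute nodes per edge switch
UPLINKS_PER_EDGE = 8  # uplinks from each edge switch to the core


def blocking_factor(downlinks: int, uplinks: int) -> Fraction:
    """Ratio of downlink ports to uplink ports on an edge switch."""
    return Fraction(downlinks, uplinks)


ratio = blocking_factor(NODES_PER_EDGE, UPLINKS_PER_EDGE)
print(f"blocking factor {ratio.numerator}:{ratio.denominator}")

# Worst-case estimate: every node sends off-switch simultaneously, so the
# uplink capacity is shared evenly among the 24 nodes.
per_node_gbps = LINK_GBPS * UPLINKS_PER_EDGE / NODES_PER_EDGE
print(f"worst-case off-switch share: {per_node_gbps:.1f} Gb/s per node")
```

Traffic between nodes on the same edge switch does not cross the uplinks and is not affected by this ratio.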

Data Transfer

The data transfer node (DTN) is a BRC server dedicated to performing transfers between the cluster's data storage resources, such as the NFS Home Directory storage and the Global Scratch Lustre parallel filesystem, and remote storage resources at other sites. High-speed data transfers are facilitated by a direct connection to CENIC via UC Berkeley's Science DMZ network architecture. This server, and not the Login Nodes, should be used for large data transfers so as not to impact interactive performance for other users on the Login Nodes. Users needing to transfer data to and from Savio should ssh from the Login Nodes or their own computers to the Data Transfer Node, dtn.brc.berkeley.edu, before doing their transfers, or else connect directly to that node from file transfer software running on their own computers.

File Transfer Software

For smaller files, you can use Secure Copy (SCP) or Secure FTP (SFTP) to transfer files between Savio and another host. For larger files, BBCP and GridFTP provide better transfer rates. In addition, Globus Connect, a user-friendly, web-based tool that is especially well suited for large file transfers, makes GridFTP transfers trivial, so users do not have to learn command-line options for manual performance tuning. Globus Connect also does automatic performance tuning and has been shown to perform comparably to, or in some cases even better than, expert-tuned GridFTP. The Globus endpoint name for the BRC DTN server is ucb#brc.
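For a small file, an SCP upload through the data transfer node can be scripted as sketched below. The hostname comes from the text above; the username, local file, and remote path are placeholders to replace with your own, and `scp_upload_command` is a hypothetical helper. The block only builds and prints the command, since actually running it requires a Savio account.

```python
import shlex
from typing import List

DTN_HOST = "dtn.brc.berkeley.edu"  # Savio data transfer node


def scp_upload_command(username: str, local_path: str, remote_path: str) -> List[str]:
    """Build an scp command that copies a local file to the DTN."""
    return ["scp", local_path, f"{username}@{DTN_HOST}:{remote_path}"]


# Placeholder values: substitute your own username and paths.
cmd = scp_upload_command("myusername", "results.csv", "/path/on/savio/results.csv")
print(shlex.join(cmd))
```

To perform the transfer, pass the list to `subprocess.run(cmd, check=True)`. Passing the arguments as a list avoids shell quoting problems with unusual file names.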

Restrictions

In order to keep the data transfer nodes performing optimally for data transfers, we request that users restrict interactive use of these systems to tasks that prepare data for transfer or are directly related to data transfer. Examples of intended usage include running Python scripts to download data from a remote source, running client software to load data from a file system into a remote database, and compressing (gzip) or bundling (tar) files in preparation for data transfer. Examples of work that should not be done on the data transfer nodes include running a database on the server to do data processing and running tests that saturate the nodes' resources. The goal is to maintain data transfer systems that have adequate memory and CPU available for interactive user data transfers.
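As an example of the kind of preparation work that is appropriate on a data transfer node, the sketch below bundles and compresses a directory into a single `.tar.gz` archive using Python's standard `tarfile` module. The directory and file names are made up for the demonstration, which runs entirely inside a temporary directory.

```python
import tarfile
import tempfile
from pathlib import Path


def bundle_directory(src_dir: Path, archive_path: Path) -> Path:
    """Bundle src_dir (recursively) into a gzip-compressed tar archive."""
    with tarfile.open(archive_path, "w:gz") as tar:
        # arcname keeps only the directory's own name inside the archive,
        # not its full absolute path.
        tar.add(src_dir, arcname=src_dir.name)
    return archive_path


# Demonstration with made-up data in a throwaway temporary directory.
with tempfile.TemporaryDirectory() as tmp:
    src = Path(tmp) / "run_output"
    src.mkdir()
    (src / "data.txt").write_text("example\n")

    archive = bundle_directory(src, Path(tmp) / "run_output.tar.gz")
    with tarfile.open(archive) as tar:
        print(sorted(tar.getnames()))
```

Transferring one archive is usually more efficient than transferring many small files individually.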

System Software

Operating System: Rocky Linux 8

Cluster Provisioning: Warewulf Cluster Toolkit

InfiniBand Stack: OFED

Message Passing Library: OpenMPI

Compilers: Intel, GCC

Job Scheduler: SLURM with NHC

Software management: Environment Modules

Data Transfer: Globus Connect

Application Software

See information on the available software on Savio for an overview of the types of application software, tools, and utilities provided on Savio.